Cybersecurity solutions

Cybersecurity breaches could result in over 146 billion records being stolen by 2023.*

What if you could leverage trust for your business advantage?

New digital landscapes, the move to cloud, the expansion of connected objects, quantum computing and the overall speed of digital transformation are changing the way we do business.

As a result, the attack surface is expanding and the threat landscape is evolving quickly, with the arrival of new and more sophisticated attacks.

Analysts predict that over 33 billion records will be stolen by cybercriminals in 2023 alone.


Protecting your business to face cybersecurity challenges

With the cyber threat landscape evolving rapidly in an increasingly complex environment, cybersecurity has become one of the top business risks.

Are you prepared for the unexpected?

Discover how the six pillars of Eviden, an Atos business, help you face cybersecurity challenges.

NEW!

Discover the #EvidenDigitalSecurityMag:

Detect early. Respond swiftly.

ANTICIPATION AND PREPARATION

RESPONSE

The #1 in Europe and a global leader in cybersecurity

With a global team of over 6,000 security specialists and a worldwide network of Security Operation Centers (SOCs), Eviden offers an end-to-end security partnership.

Our portfolio brings the power of big data analytics and automation to our clients for more efficient and agile security controls. Our six building blocks — all linked to analytics and automation — bring efficiency to our clients to better protect their critical data: personal data, intellectual property, financial data, and more.

Cybersecurity products >>

Discover our range of cybersecurity products

Cybersecurity Services >>

Discover our cybersecurity services

- Audit

- Consulting

- Integration

- Managed Security Services


Identities need to be protected to avoid breaches. You need to ensure the integrity of identities and access control of your company. The Eviden Trusted Digital Identities solution enables you to provide secure and convenient access to critical resources for business users and devices, while meeting compliance demands >>


With the number of connected objects growing exponentially, both IoT security and OT security are growing concerns that need to be addressed with a security-by-design approach >>


With the working environment becoming digital, we need to ensure security wherever people are located. With Eviden Digital Workplace Security solutions, you can securely work any time, from anywhere and from any device >>


Data Protection and Governance

The journey to cloud, IoT and OT security, or the digital workplace can only begin once we know the maturity level of the organization. Another key concern when making these moves is the protection of sensitive data (HR data, IP data, financial data, customer data, etc.) >>


Hybrid Cloud Security

Moving to the cloud is no longer a question of “if,” but “how”: how to move securely to the cloud, and how to keep control of data (including sensitive data) while benefiting from the flexibility of the cloud.


Advanced Detection and Response

Supervision and orchestration are key features needed for a 360° view of what’s happening in the organization – on-premises and in the cloud. Eviden Managed Detection and Response (MDR) brings you multi-vector threat detection and full-service response at remarkable speeds, leveraging our 16 SOCs >>

On the road to Zero Trust

“Zero Trust” cybersecurity is establishing itself in a cloud and mobile world where the information system is increasingly distributed and where users work both on the move and from home.

Leading the cybersecurity community

To reinforce our expertise and contribute to the development of our cybersecurity offerings, Eviden and our cybersecurity experts participate in numerous working groups and are members of several leading cybersecurity communities.

Eviden believes that innovative, collaborative end-to-end cybersecurity is a strong asset and a competitive differentiator for any organization.

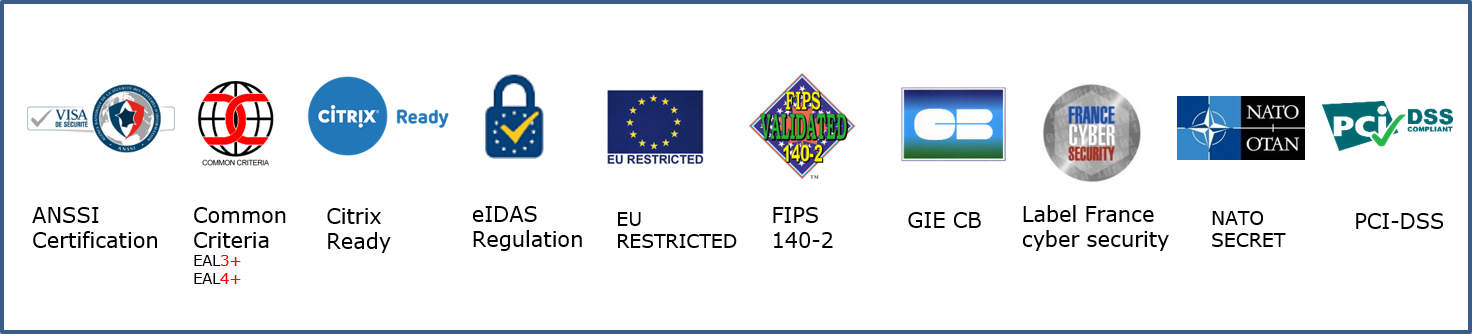

Our cybersecurity product qualifications and certifications

Hardware and software solutions for industries

In a world where cyberattacks are increasing in volume and sophistication, what cybersecurity solutions can be provided to meet the challenges specific to each industry?

Healthcare & Life Sciences

Deliver the right diagnosis for your security challenges and help clinicians and pharmaceutical workers focus on their core activity

Financial Services

Address cybersecurity challenges as part of the digital business transformation of banks and insurers

Public sector

Protect critical services and infrastructure with end-to-end digital security

Media

Secure media operations across the entire broadcast chain

Energy & utilities

Combine trust and compliance for the digitalization of production

Brochure: Tackling cybersecurity threats in the Utility industry >>

Brochure: Bringing trust and compliance in the age of digital transformation >>

Retail, Transport & Logistics

Improve security and accessibility for your customers

Manufacturing

Provide comprehensive digital security for Industry 4.0

Telecom

Navigate telecom security and resilience with a trusted security orchestrator for your networks

Our cybersecurity expertise

Related resources and news

Opinion paper

Forging a new digital security paradigm

This opinion paper aims to provide an engaging and informed view of the key challenges that will shape the future of digital security. It explores the main topics organizations should address to raise the bar in cybersecurity and win the cyber race.

Brochure

We created the Eviden Cybersecurity Tech Radar to help organizations identify the security technologies that can help them address cyber threats efficiently.